Moltworker: The Self-Hosted AI Agent Revolution (Without the Hardware)

The dream of a truly personal AI agent—one that knows you, remembers your context, and operates autonomously—has often come with a heavy physical tax: the need for a dedicated local server. For years, running a “sovereign” agent meant keeping a Mac Mini or a gaming PC humming in the corner, managing Docker containers, and fussing with port forwarding.

That era is ending. With the introduction of Moltworker, Cloudflare has demonstrated how to move the “personal” AI agent entirely to the edge, maintaining privacy and control without the hardware headache.

Key Takeaways

- No Hardware Required: Run a fully autonomous agent (Moltbot) on Cloudflare’s serverless stack.

- Enterprise-Grade Security: Protect your agent’s interface with Zero Trust Access.

- Persistent Memory: Use R2 object storage to give your agent long-term recall.

- Cost Efficiency: Deploy for as little as $5/mo on the Cloudflare Workers Paid plan.

The Hardware Bottleneck

For privacy-conscious developers, “local” has been the gold standard. Running an agent on your own metal ensures no one else is snooping on your data. But this approach scales poorly. It requires maintenance, consumes electricity, and limits your agent’s availability to the uptime of your home internet connection.

Cloudflare’s new reference implementation, Moltworker, flips this script. By adapting the open-source Moltbot (formerly Clawdbot) to run on Cloudflare Workers, we can now achieve the best of both worlds: the sovereignty of self-hosting with the scalability of the cloud.

Enter Moltworker: A Serverless Agent Architecture

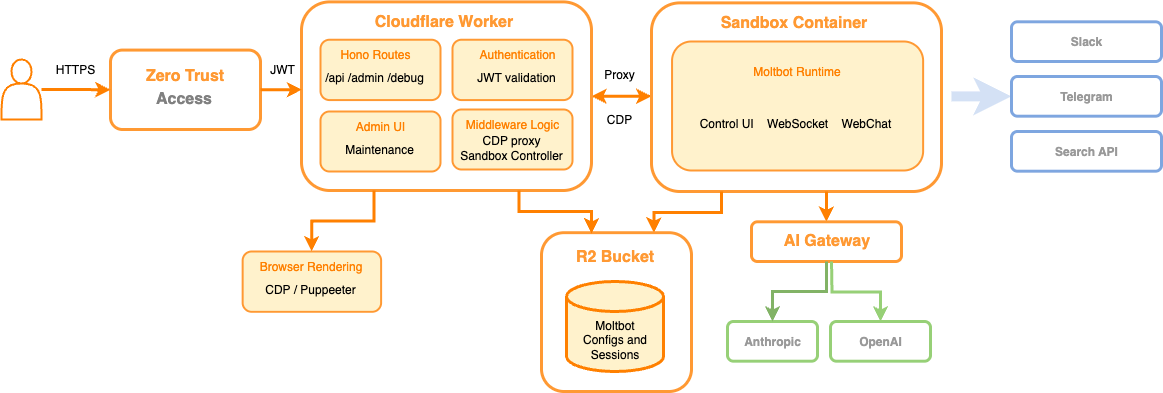

Moltworker isn’t just a script; it’s a micro-orchestration of several powerful cloud primitives. Instead of a monolithic Docker container running on a desktop, the agent is deconstructed into specialized serverless components.

The Anatomy of a Cloud Agent

- The Brain (Sandboxes): At the core is the Cloudflare Sandbox, an isolated environment that runs the Moltbot runtime. This replaces the local Docker container, executing untrusted code securely and providing the compute needed for the agent’s logic.

- The Nervous System (Workers): A standard Cloudflare Worker acts as the API router and proxy. It handles incoming requests, routes them to the Sandbox, and manages the flow of data.

- The Memory (R2): Persistence is handled by R2, Cloudflare’s object storage. Unlike ephemeral containers that wipe data on restart, R2 allows the agent to mount a bucket as a filesystem, saving conversation history and “memories” permanently.

- The Eyes and Hands (Browser Rendering): When the agent needs to browse the web, for example to check flight prices or read documentation, it doesn’t spawn a heavy local browser. Instead, it calls Cloudflare’s Browser Rendering API (Puppeteer), allowing it to interact with the web efficiently and securely.
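To make the division of labor concrete, here is a minimal TypeScript sketch of how a router, a runtime, and a persistent store might fit together. It is not Moltworker’s actual code: `AgentRuntime`, `MemoryStore`, `InMemoryStore`, `EchoRuntime`, `handleChat`, and the `sessions/` key layout are all hypothetical stand-ins for the Sandbox, R2, and Worker roles described above.

```typescript
// Hypothetical stand-in for the Sandbox role: runs the agent logic.
interface AgentRuntime {
  handle(message: string, history: string[]): Promise<string>;
}

// Hypothetical stand-in for the R2 role: durable key/value storage.
interface MemoryStore {
  get(key: string): Promise<string | null>;
  put(key: string, value: string): Promise<void>;
}

// In-memory store used only so the sketch runs without any cloud bindings.
class InMemoryStore implements MemoryStore {
  private data = new Map<string, string>();

  async get(key: string): Promise<string | null> {
    return this.data.get(key) ?? null;
  }

  async put(key: string, value: string): Promise<void> {
    this.data.set(key, value);
  }
}

// Toy runtime that reports which turn it is answering.
class EchoRuntime implements AgentRuntime {
  async handle(message: string, history: string[]): Promise<string> {
    return `turn ${history.length + 1}: ${message}`;
  }
}

// The Worker role: load history, delegate to the runtime, persist the result.
async function handleChat(
  sessionId: string,
  message: string,
  runtime: AgentRuntime,
  memory: MemoryStore,
): Promise<string> {
  const key = `sessions/${sessionId}.json`; // assumed key layout
  const stored = await memory.get(key);
  const history: string[] = stored ? JSON.parse(stored) : [];
  const reply = await runtime.handle(message, history);
  history.push(message);
  await memory.put(key, JSON.stringify(history));
  return reply;
}
```

Because the history lives in the store rather than in the runtime, a fresh `EchoRuntime` instance (standing in for a restarted container) picks up the conversation where the previous one left off, which is the property the R2-backed memory is meant to provide.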

Why This Matters for Business

The implications extend far beyond hobbyist projects. This architecture outlines a blueprint for enterprise AI deployment.

By leveraging Zero Trust Access, organizations can deploy internal research agents that are accessible only to authenticated employees, without exposing the agent’s interface to unauthenticated traffic from the public internet. The integration of AI Gateway adds a layer of observability and control, allowing teams to monitor token usage, manage costs across different providers (like Anthropic), and cache responses to reduce latency.
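As a rough illustration of these two controls, the TypeScript sketch below checks the claims of an already-verified Cloudflare Access token and builds an AI Gateway URL for Anthropic. The helper names `isRequestAllowed` and `anthropicGatewayUrl` and all sample values are hypothetical. The sketch assumes the JWT signature has already been verified against your team’s Access public keys, a step it deliberately omits.

```typescript
// Subset of claims carried in a Cloudflare Access JWT payload.
interface AccessClaims {
  aud: string[]; // audience tags of the Access application
  email: string; // identity of the authenticated user
  exp: number;   // expiry, in seconds since the Unix epoch
}

// Claims check only. Signature verification must happen before this
// function is called; it is intentionally out of scope for the sketch.
function isRequestAllowed(
  claims: AccessClaims,
  expectedAud: string,
  nowSeconds: number,
): boolean {
  return claims.aud.includes(expectedAud) && claims.exp > nowSeconds;
}

// Builds the AI Gateway endpoint that proxies Anthropic's Messages API.
// accountId and gatewayId come from your own Cloudflare dashboard.
function anthropicGatewayUrl(accountId: string, gatewayId: string): string {
  return `https://gateway.ai.cloudflare.com/v1/${accountId}/${gatewayId}/anthropic/v1/messages`;
}
```

Sending model requests to the gateway URL instead of calling the provider directly is what lets the gateway log usage and serve cached responses.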

As we’ve explored in our analysis of the Agentic AI Revolution, the shift from chatbots to autonomous agents is the defining trend of this cycle. Moltworker proves that the infrastructure to support this shift is ready today. It aligns perfectly with the move towards specialized micro-services, similar to what we see with Cloudflare MCP Servers.

Final Thoughts

Moltworker represents a maturing of the AI ecosystem. We are moving away from hacked-together local solutions towards robust, cloud-native architectures that respect privacy and sovereignty.

Whether you are a developer looking to build a personal assistant or a CTO planning an internal agent workforce, the serverless approach offers a compelling path forward. You can check out the source code and deploy your own instance via the Moltworker GitHub repository and read the full technical deep dive on the Cloudflare Blog.

The future of AI agents isn’t on your desk—it’s everywhere.