Kimi K2.5: The Visual Agentic Swarm Revolution

The era of the solitary chatbot is ending. Kimi K2.5 has arrived, and it brought a swarm.

With the release of Kimi K2.5 just two days ago, Moonshot AI has pushed the boundaries of what open-source models can achieve, specifically targeting the complex intersection of vision, coding, and autonomous agent orchestration. This isn’t just another incremental update; it’s a shift towards “Visual Agentic Intelligence” that fundamentally changes how we interact with AI for complex tasks.

Key Takeaways

- Visual Coding Powerhouse: Native multimodal pretraining allows K2.5 to turn videos and images directly into functional code, excelling in front-end development.

- Agent Swarm Architecture: The model can self-direct up to 100 sub-agents, running parallel workflows that span up to 1,500 tool calls, which drastically reduces execution time for complex tasks.

- Parallel-Agent Reinforcement Learning: A new training paradigm (PARL) prevents the common “serial collapse” of agent systems, in which an orchestrator falls back to running everything one step at a time, so that work is actually executed in parallel.

- Open Access: Available now via API and the new Kimi Code terminal tool.

Beyond Text: Visual Agentic Intelligence

Most “multimodal” models today still treat vision as a secondary sense: they process an image and then switch back to text-based reasoning. Kimi K2.5, by contrast, was pretrained on approximately 15 trillion mixed visual and text tokens, making it a native multimodal model.

This is a game-changer for software development. As we explored in our analysis of AI Code Assistants, the future of coding is increasingly visual. K2.5 can ingest a video of a UI interaction and reconstruct the website, complete with animations and layout logic. It doesn’t just “see” the image; it reasons about the user intent behind the pixels. This capability bridges the gap between design and implementation, allowing for a more fluid workflow where “visual debugging” becomes a reality.
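To make the “screenshot in, code out” idea concrete, here is a minimal sketch of what such a request might look like. It assumes the API accepts OpenAI-style chat messages with an inline base64 image, which this article does not confirm. The model id `kimi-k2.5` and the helper `build_screenshot_request` are placeholders for illustration, and nothing is actually sent over the network.

```python
import base64
import json

# Assumption: placeholder model id, not confirmed by the article.
MODEL_ID = "kimi-k2.5"


def build_screenshot_request(image_bytes: bytes, instruction: str) -> dict:
    """Build an OpenAI-style chat payload pairing one PNG image with a text prompt."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL_ID,
        "messages": [
            {
                "role": "user",
                "content": [
                    # The image travels inline as a data URL.
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{encoded}"},
                    },
                    # The instruction tells the model what to do with it.
                    {"type": "text", "text": instruction},
                ],
            }
        ],
    }


if __name__ == "__main__":
    fake_png = b"\x89PNG placeholder bytes"  # stand-in for a real screenshot
    payload = build_screenshot_request(
        fake_png, "Rebuild this page as HTML and CSS."
    )
    print(json.dumps(payload, indent=2)[:200])
```

In a real workflow you would read the bytes from a screenshot file and post the payload to whichever endpoint your provider documents.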

The Power of the Swarm: Scaling Out, Not Just Up

Perhaps the most significant breakthrough in K2.5 is its Agent Swarm capability. Traditional agentic systems often suffer from high latency because they execute tasks sequentially, one step after another. Kimi K2.5 removes this bottleneck.

Using a technique called Parallel-Agent Reinforcement Learning (PARL), the model learns to decompose massive tasks into parallelizable chunks. It acts as an orchestrator, spinning up specialized sub-agents (e.g., a “Physics Researcher,” “Fact Checker,” or “Code Reviewer”) to work simultaneously. This “scaling out” approach is critical for the Rise of Agentic AI, where the bottleneck is no longer intelligence, but throughput and coordination.
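The orchestrator pattern described above can be sketched in a few lines of Python. This is an illustration of fan-out and gather with a concurrency cap, not Moonshot's implementation: `run_sub_agent` is a hypothetical stand-in for a real model or tool call, and the cap of 100 simply mirrors the sub-agent limit quoted earlier.

```python
import asyncio

MAX_SUB_AGENTS = 100  # mirrors the article's "up to 100 sub-agents"


async def run_sub_agent(role: str, subtask: str) -> str:
    """Hypothetical stand-in for a real sub-agent call (model or tool)."""
    await asyncio.sleep(0.01)  # simulate I/O-bound work
    return f"[{role}] finished: {subtask}"


async def orchestrate(subtasks: dict[str, str]) -> list[str]:
    """Fan independent subtasks out to sub-agents and collect the results."""
    limit = asyncio.Semaphore(MAX_SUB_AGENTS)

    async def guarded(role: str, subtask: str) -> str:
        async with limit:  # never run more than MAX_SUB_AGENTS at once
            return await run_sub_agent(role, subtask)

    # gather() runs the coroutines concurrently and keeps argument order.
    return await asyncio.gather(
        *(guarded(role, task) for role, task in subtasks.items())
    )


if __name__ == "__main__":
    plan = {
        "Physics Researcher": "summarize the relevant papers",
        "Fact Checker": "verify the cited figures",
        "Code Reviewer": "audit the simulation script",
    }
    for line in asyncio.run(orchestrate(plan)):
        print(line)
```

Because the three sub-agents wait concurrently, the whole run takes roughly as long as the slowest one rather than the sum of all three.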

In Moonshot AI’s internal benchmarks, this swarm architecture reduced end-to-end runtime by up to 80% for complex tasks compared to single-agent setups. In effect, the orchestrator keeps shortening the “critical path” of a project (the longest chain of steps that must run one after another), so that adding agents leads to faster results rather than just more noise.
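Why the critical path matters is easiest to see with numbers. The sketch below compares a serial run with an ideal parallel run of a small task graph. The graph and its durations are invented for illustration and are not taken from any benchmark.

```python
from functools import lru_cache

# Hypothetical task graph: name -> (duration in minutes, prerequisites)
TASKS = {
    "plan": (2, []),
    "research": (10, ["plan"]),
    "code": (12, ["plan"]),
    "review": (6, ["plan"]),
    "merge": (3, ["research", "code", "review"]),
}


def serial_runtime(tasks: dict) -> int:
    """A single agent runs every task back to back."""
    return sum(duration for duration, _ in tasks.values())


def critical_path_runtime(tasks: dict) -> int:
    """With enough agents, runtime equals the longest dependency chain."""

    @lru_cache(maxsize=None)
    def finish(name: str) -> int:
        duration, deps = tasks[name]
        # A task starts once its slowest prerequisite has finished.
        return duration + max((finish(dep) for dep in deps), default=0)

    return max(finish(name) for name in tasks)


if __name__ == "__main__":
    serial = serial_runtime(TASKS)           # 2 + 10 + 12 + 6 + 3 = 33
    parallel = critical_path_runtime(TASKS)  # plan -> code -> merge = 17
    print(f"serial: {serial} min, parallel: {parallel} min")
    print(f"runtime reduction: {1 - parallel / serial:.0%}")
```

In this toy graph, parallelism cuts runtime from 33 to 17 minutes. Adding more agents would not help further, because the plan, code, merge chain still has to run in order. The more independent work a task contains, the larger the saving.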

Real-World Productivity and Benchmarks

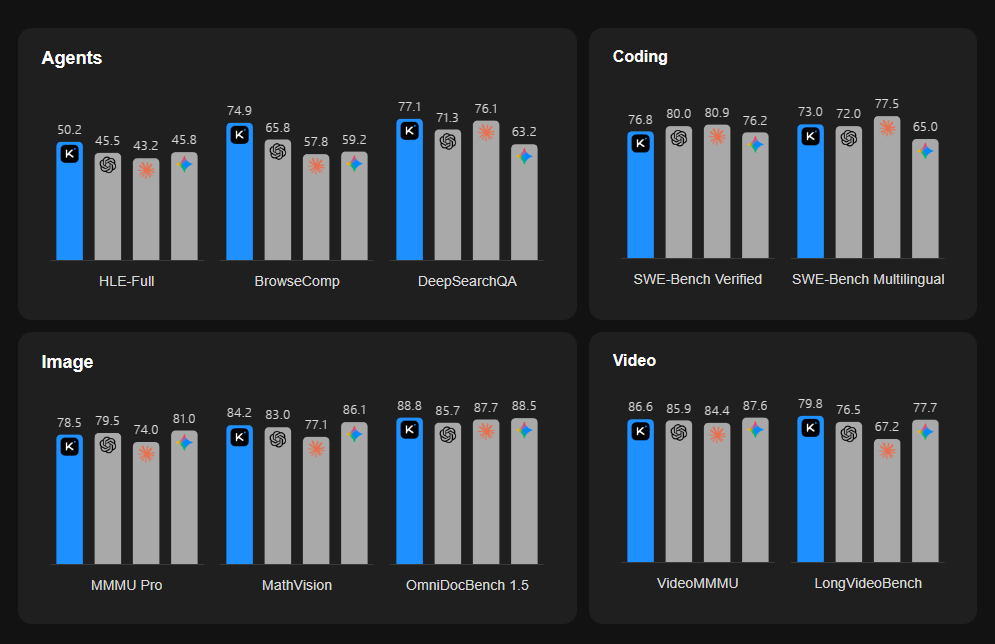

The theoretical capabilities of swarms are impressive, but Kimi K2.5 backs them up with strong performance on standard benchmarks such as Humanity’s Last Exam (HLE), BrowseComp, and SWE-bench Verified.

The benchmark results above highlight K2.5’s competitive standing against other leading models, particularly in agentic and coding tasks.

For enterprise users and developers, this translates to tangible productivity gains. Whether it’s analyzing 100-page documents, constructing financial models, or automating complex procurement workflows (a topic we touched on in Agentic AI Unleashed), K2.5 is designed to handle the “grind” of knowledge work.

Final Thoughts

Kimi K2.5 marks a point of maturity for open-source AI. It moves us away from the “one model does it all” mentality toward a more nuanced, architectural approach where the model is the manager of a digital workforce.

For developers, the immediate next step is to explore Kimi Code, which integrates these capabilities directly into the terminal and IDEs. As models like K2.5 evolve, the line between “using an AI” and “hiring an AI team” will keep blurring.

Sources: Kimi K2.5 Technical Report, Moonshot AI Platform.