Multimodal AI: The New Frontier of Intelligence

Understanding the Multimodal AI Revolution

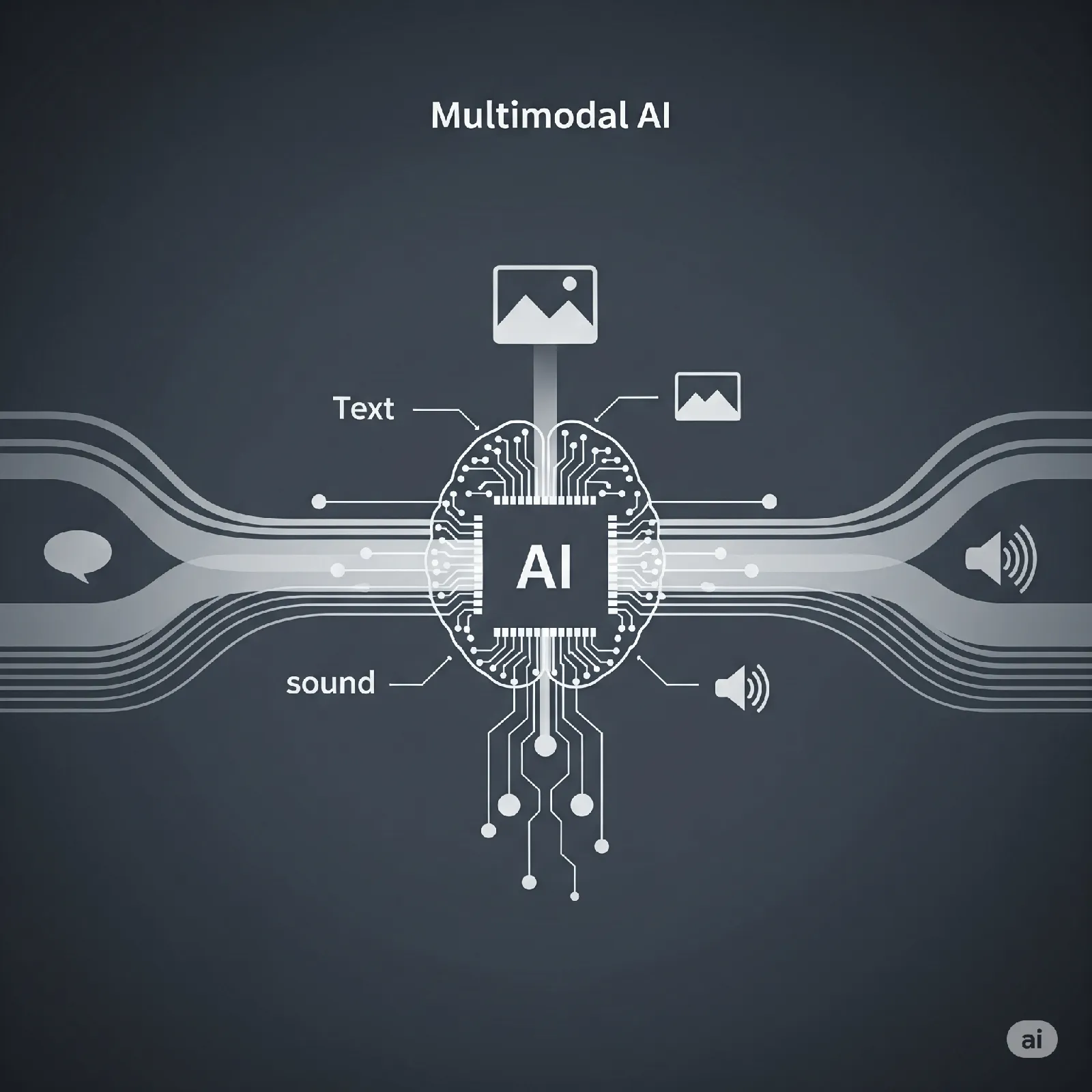

The world of Artificial Intelligence is no longer silent, nor is it blind. We’re stepping into an era where AI comprehends the world more like humans do – by processing a rich tapestry of information, not just text or images in isolation. This is the dawn of Multimodal AI, a groundbreaking approach where systems are trained to understand, interpret, and generate content from multiple types of data inputs simultaneously, such as voice, visuals, text, and even sensor data. This isn’t just an incremental update; it’s a fundamental shift in the future of artificial intelligence, promising to unlock unprecedented capabilities and redefine how AI in business operates and how we interact with technology daily.

Why Multimodal AI is a Game-Changer

Traditional AI models were often specialists, excelling in one domain – a natural language processing model for text, or a computer vision model for images. Multimodal AI breaks down these silos. Imagine an AI that can watch a video, listen to the audio, read on-screen text, and then provide a comprehensive summary or answer nuanced questions about the content. This holistic understanding is the core strength of multimodal systems.

Key advantages include:

- Richer Contextual Understanding: By integrating data from various sources, these AI systems gain a deeper, more nuanced understanding of the subject matter. This is crucial for complex AI applications where context is everything.

- Enhanced Human-AI Interaction: Communication becomes more natural. Users can interact with AI using voice, gestures, and text, leading to more intuitive and accessible experiences.

- New Problem-Solving Capabilities: Problems previously too complex for single-modal AI become solvable. Think of diagnosing diseases by correlating medical images with patient history and doctor’s notes, or creating truly immersive educational content.

Real-World Impacts: Multimodal AI in Action

The applications of Multimodal AI are already beginning to reshape industries:

- Healthcare: AI systems are assisting doctors by analyzing medical images (X-rays, MRIs) alongside patient records and even real-time data from wearable sensors to improve diagnostic accuracy.

- Automotive: Advanced driver-assistance systems (ADAS) use cameras, LiDAR, radar, and even in-cabin audio processing to understand the vehicle’s environment and driver state, enhancing safety.

- Customer Experience: Businesses are leveraging Multimodal AI to create sophisticated chatbots and virtual assistants that can understand customer queries whether typed or spoken, and even analyze sentiment from tone of voice or facial expressions (with user consent).

- Content Creation and Accessibility: From generating detailed image descriptions for the visually impaired to creating dynamic presentations from text prompts and data, Multimodal AI is democratizing content creation. This also impacts AI in business by streamlining marketing and communication workflows.

The Road Ahead: Challenges and Opportunities

While the potential of Multimodal AI is immense, there are challenges to address. Training these complex models requires vast, high-quality datasets covering multiple modalities. Ensuring fairness, mitigating bias that might creep in from diverse data sources, and maintaining data privacy are critical considerations.

However, the momentum is undeniable. As research progresses and computational power increases, we can expect the future of artificial intelligence to be increasingly multimodal. For businesses, this means developing a robust enterprise adoption strategy to unlock new avenues for innovation, efficiency, and creating more engaging customer experiences. For individuals, it promises a future where technology understands us better and assists us in more meaningful ways. The journey of Multimodal AI is just beginning, and it’s set to be a transformative one.